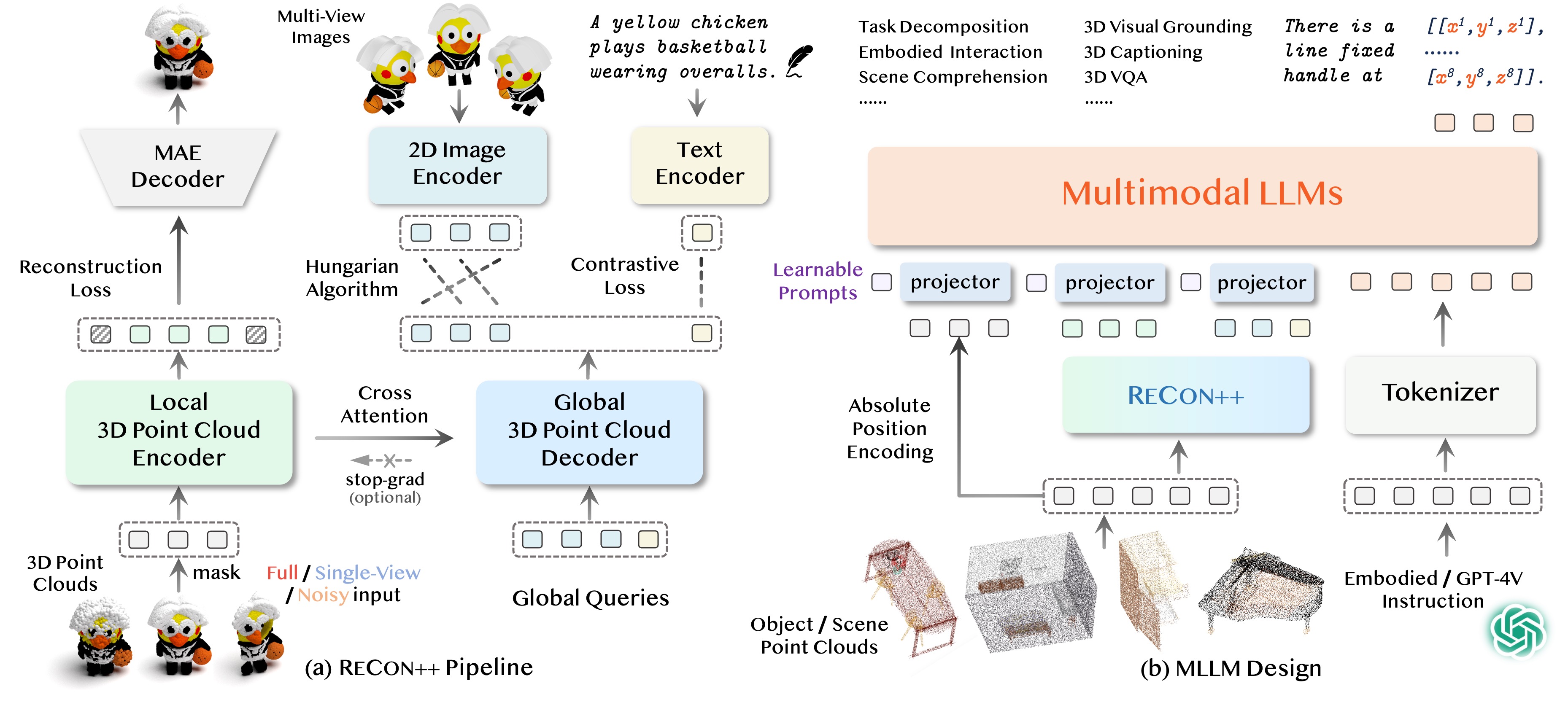

Pipeline

ShapeLLM

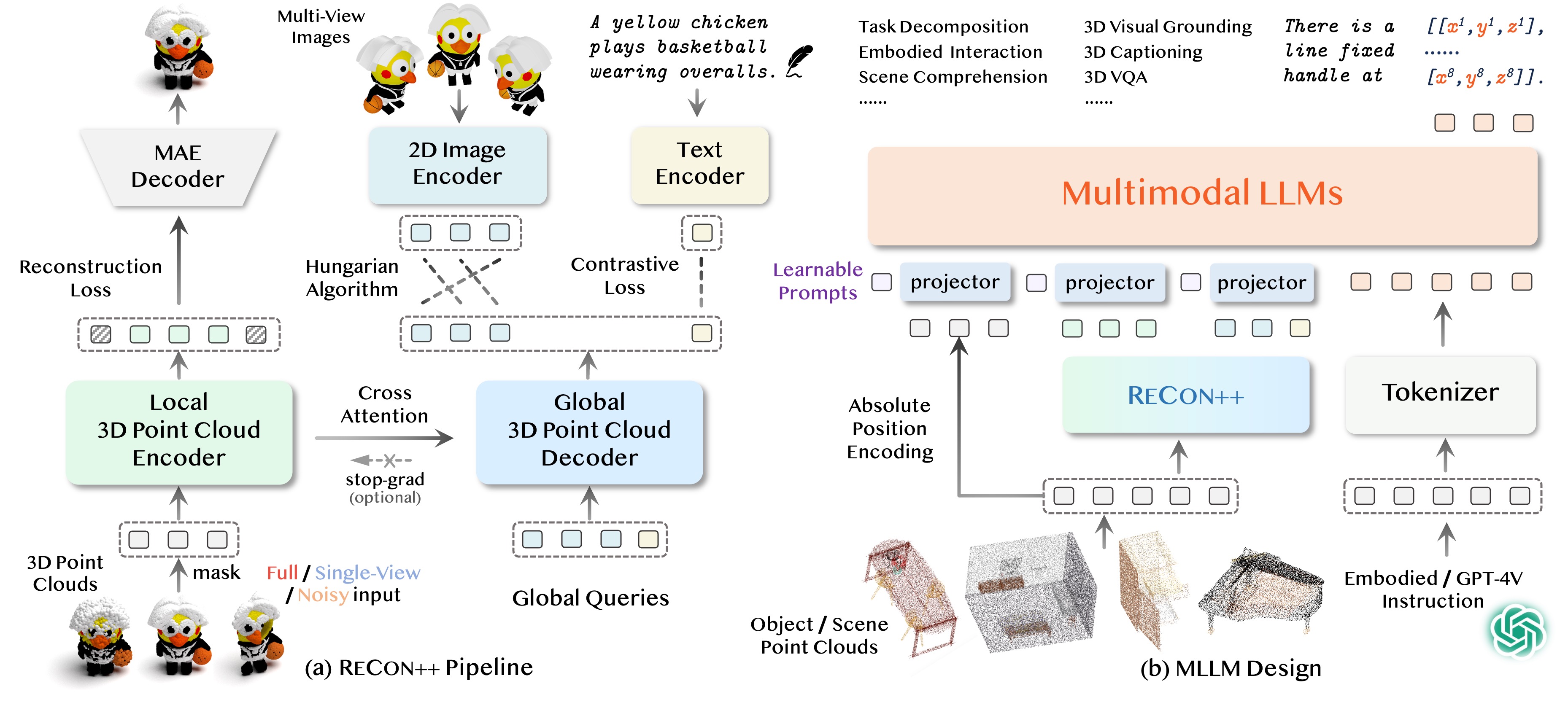

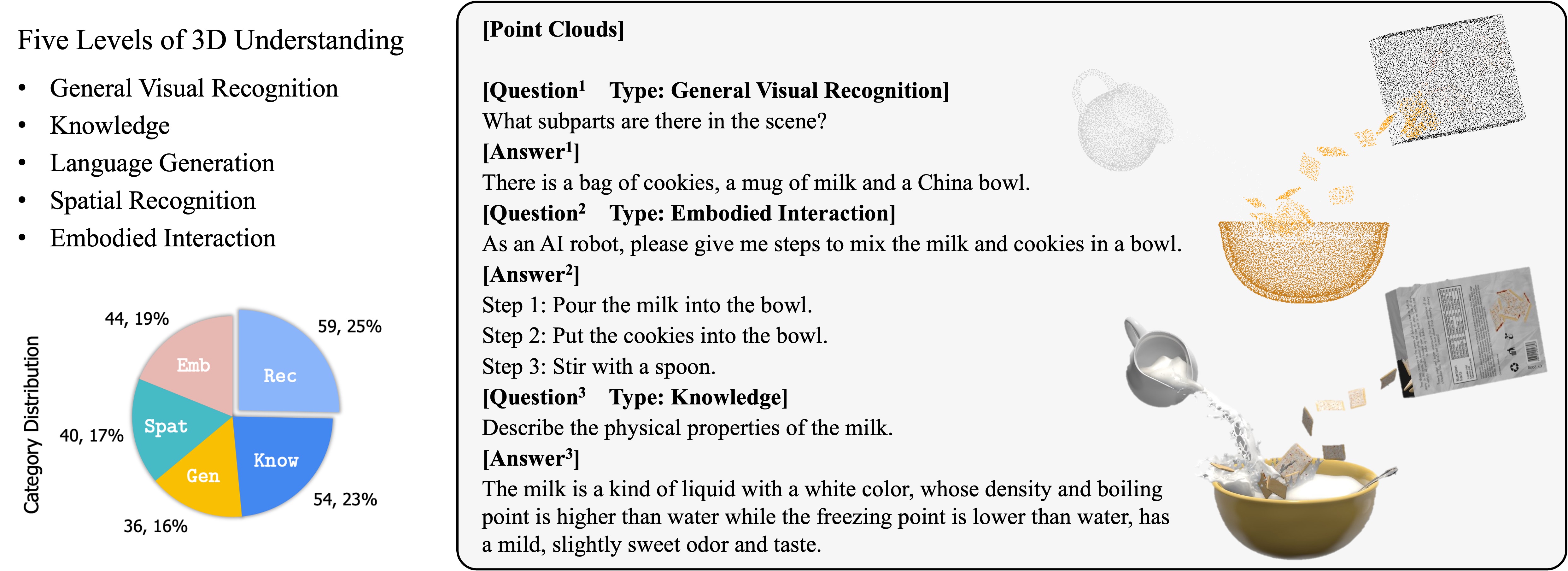

Universal 3D Object Understanding for Embodied Interaction (ECCV 2024)

@article{qi2024shapellm,
  author = {Qi, Zekun and Dong, Runpei and Zhang, Shaochen and Geng, Haoran and Han, Chunrui and Ge, Zheng and Yi, Li and Ma, Kaisheng},
  title = {ShapeLLM: Universal 3D Object Understanding for Embodied Interaction},
  journal = {arXiv preprint arXiv:2402.17766},
  year = {2024},
}