|

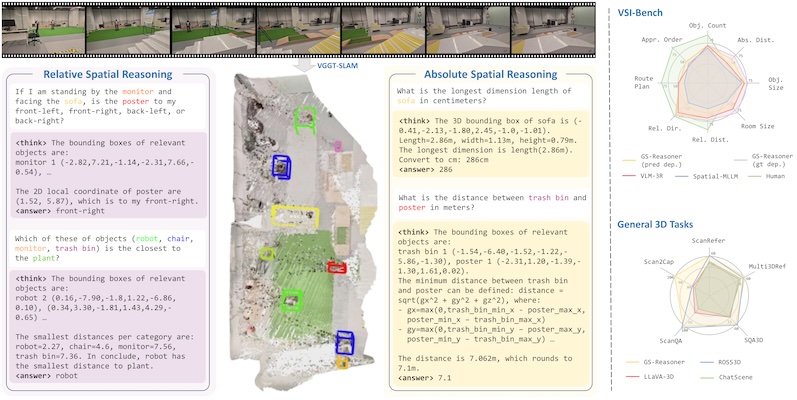

Yiming Chen, Zekun Qi, Wenyao Zhang, Xin Jin, Li Zhang, Peidong Liu International Conference on Learning Representations (ICLR), 2026 [arXiv] [Project Page] [Code] [HuggingFace] We believe that grounding can be seen as a chain-of-thought for spatial reasoning. Based on this, we achieve a new SOTA performance on VSI-Bench. |

|

Mengdi Jia*, Zekun Qi*, Shaochen Zhang, Wenyao Zhang, Xinqiang Yu, Jiawei He, He Wang, Li Yi International Conference on Learning Representations (ICLR), 2026 [arXiv] [Project Page] [Code] [Huggingface] Based on cognitive psychology, we introduce a comprehensive and complex spatial reasoning benchmark, including 50 detailed categories and 1.5K manually labeled QA pairs. |

|

|

Tianyu Xu*, Jiawei Chen*, Jiazhao Zhang*, Wenyao Zhang, Zekun Qi, Minghan Li, Zhizheng Zhang, He Wang ArXiv Preprint, 2025 [arXiv] [Project Page] We present MM-Nav, a multi-view VLA system with 360° perception. The model is trained on large-scale expert navigation data collected from multiple reinforcement learning agents, demonstrating robust generalization in complex navigation scenarios. |

|

|

Zekun Qi*, Wenyao Zhang*, Yufei Ding*, Runpei Dong, Xinqiang Yu, Jingwen Li, Lingyun Xu, Baoyu Li, Xialin He, Guofan Fan, Jiazhao Zhang, Jiawei He, Jiayuan Gu, Xin Jin, Kaisheng Ma, Zhizheng Zhang, He Wang, Li Yi Conference on Neural Information Processing Systems (NeurIPS), 2025, Spotlight [arXiv] [Project Page] [Code] [Huggingface] We introduce the concept of semantic orientation, which represents object orientation conditioned on open-vocabulary language. |

|

|

Wenyao Zhang*, Hongsi Liu*, Zekun Qi*, Yunnan Wang*, Xinqiang Yu, Jiazhao Zhang, Runpei Dong, Jiawei He, He Wang, Zhizheng Zhang, Li Yi, Wenjun Zeng, Xin Jin Conference on Neural Information Processing Systems (NeurIPS), 2025 [arXiv] [Project Page] [Code] We recast the vision–language–action model as a perception–prediction–action model and make the model explicitly predict a compact set of dynamic, spatial and high-level semantic information, supplying concise yet comprehensive look-ahead cues for planning. |

|

|

Jiawei He*, Danshi Li*, Xinqiang Yu*, Zekun Qi, Wenyao Zhang, Jiayi Chen, Zhaoxiang Zhang, Zhizheng Zhang, Li Yi, He Wang International Conference on Computer Vision (ICCV), 2025, Highlight [arXiv] [Project Page] [Code] We introduce DexVLG, a vision‑language‑grasp model trained on a 170M‑pose, 174k‑object dataset that can generate instruction‑aligned dexterous grasp poses and achieves SOTA success and part‑grasp accuracy. |

|

|

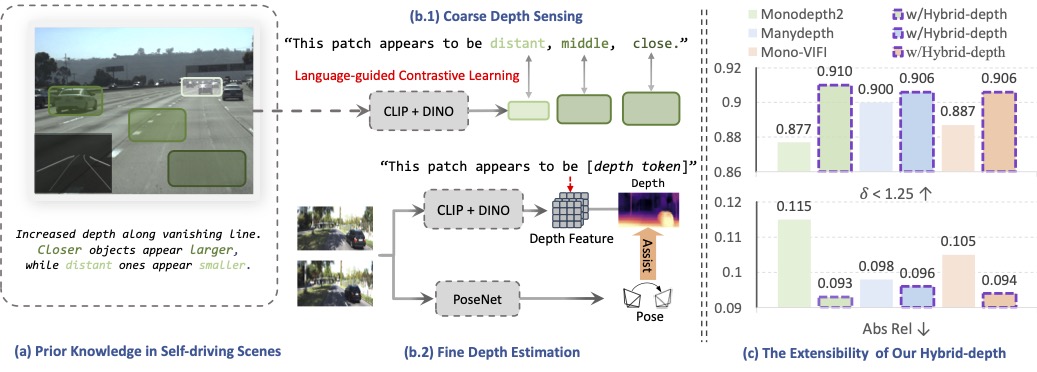

Wenyao Zhang, Hongsi Liu, Bohan Li, Jiawei He, Zekun Qi, Yunnan Wang, Shengyang Zhao, Xinqiang Yu, Wenjun Zeng, Xin Jin International Conference on Computer Vision (ICCV), 2025 [arXiv] We introduce Hybrid‑depth, a self‑supervised method that aligns hybrid semantics via language‑guided fusion, achieving SOTA accuracy on KITTI and boosting downstream perception. |

|

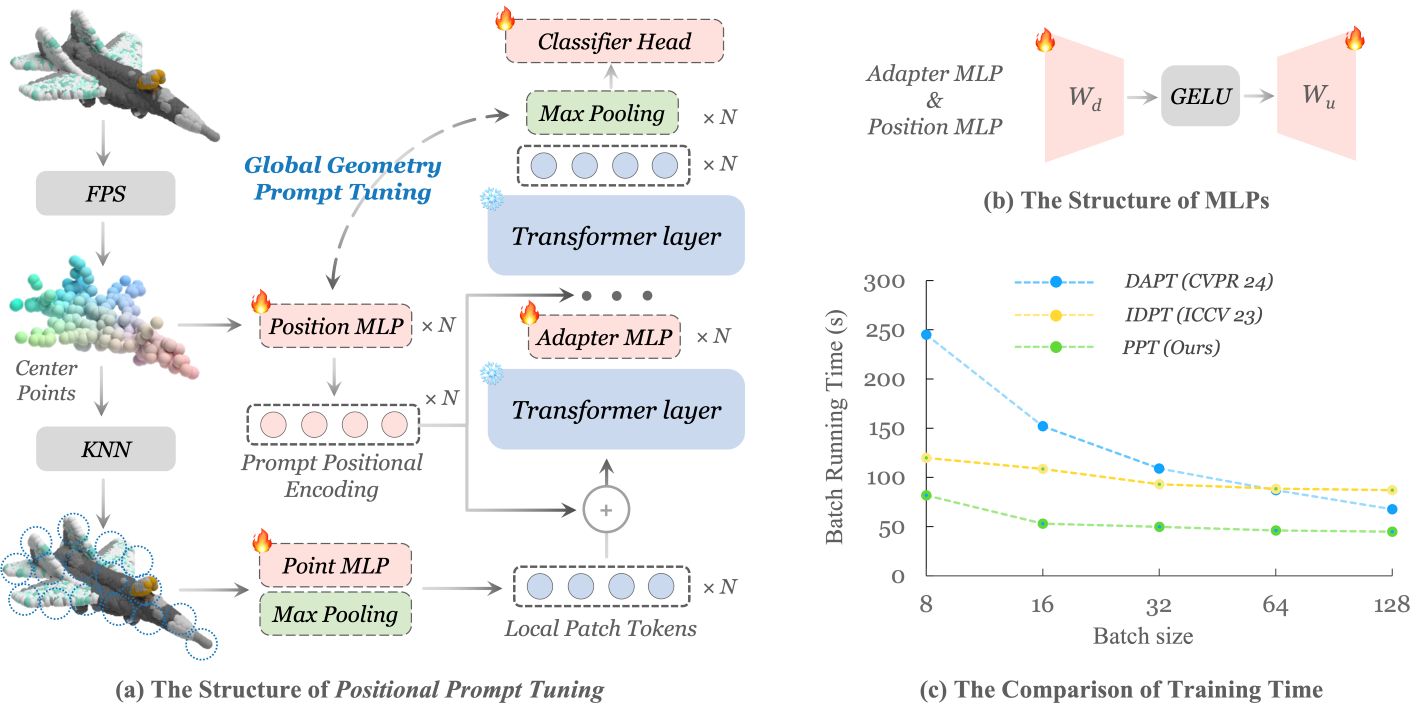

Shaochen Zhang*, Zekun Qi*, Runpei Dong, Xiuxiu Bai, Xing Wei ACM International Conference on Multimedia (ACMMM), 2025, Oral [arXiv] [Code] We rethink the role of positional encoding in 3D representation learning, and propose Positional Prompt Tuning, a simple but efficient method for transfer learning. |

|

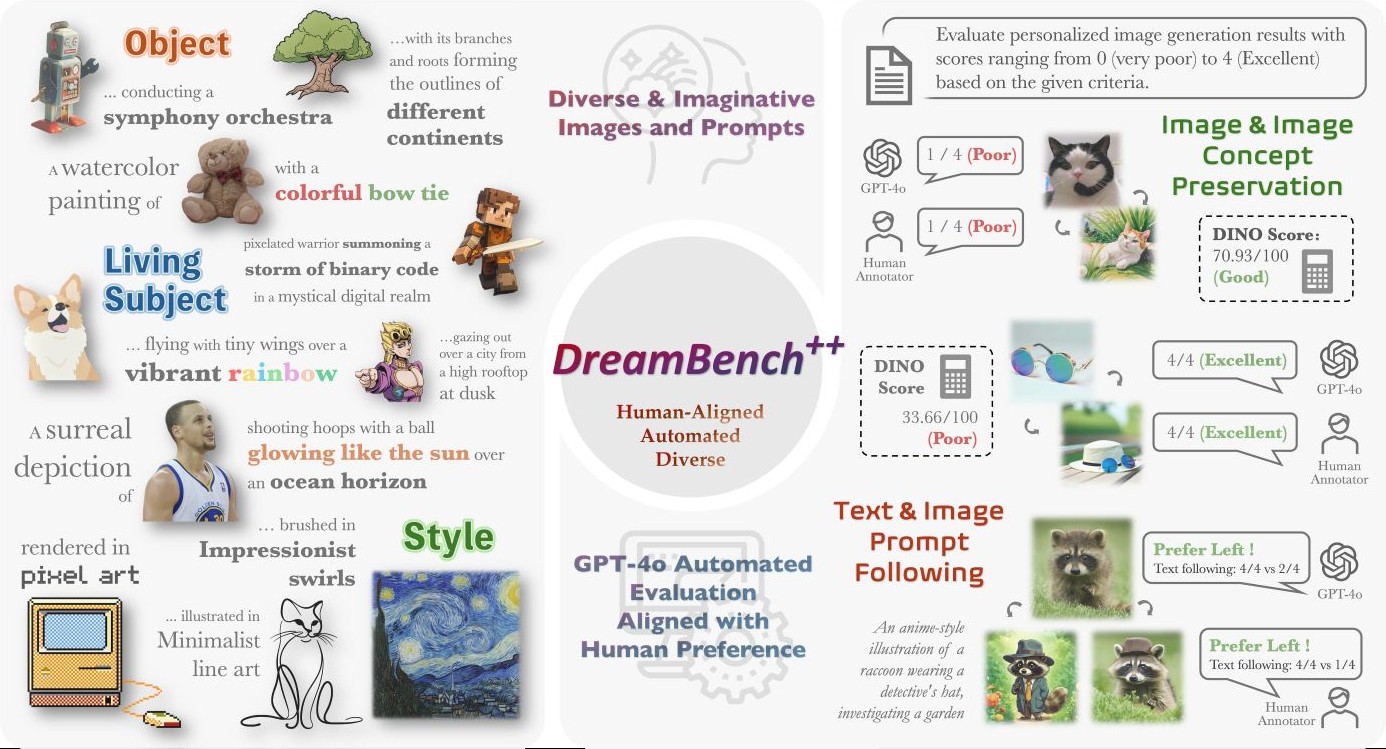

Yuang Peng*, Yuxin Cui*, Haomiao Tang*, Zekun Qi, Runpei Dong, Jing Bai, Chunrui Han, Zheng Ge, Xiangyu Zhang, Shu-Tao Xia International Conference on Learning Representations (ICLR), 2025 [arXiv] [Project Page] [Code] We collect diverse images and prompts, and utilize GPT-4o for automated evaluation aligned with human preference. |

|

Zekun Qi, Runpei Dong, Shaochen Zhang, Haoran Geng, Chunrui Han, Zheng Ge, Li Yi, Kaisheng Ma European Conference on Computer Vision (ECCV), 2024 [arXiv] [Project Page] [Code] [Huggingface] We present ShapeLLM, the first 3D Multimodal Large Language Model designed for embodied interaction, exploring universal 3D object understanding with 3D point clouds and language. |

|

|

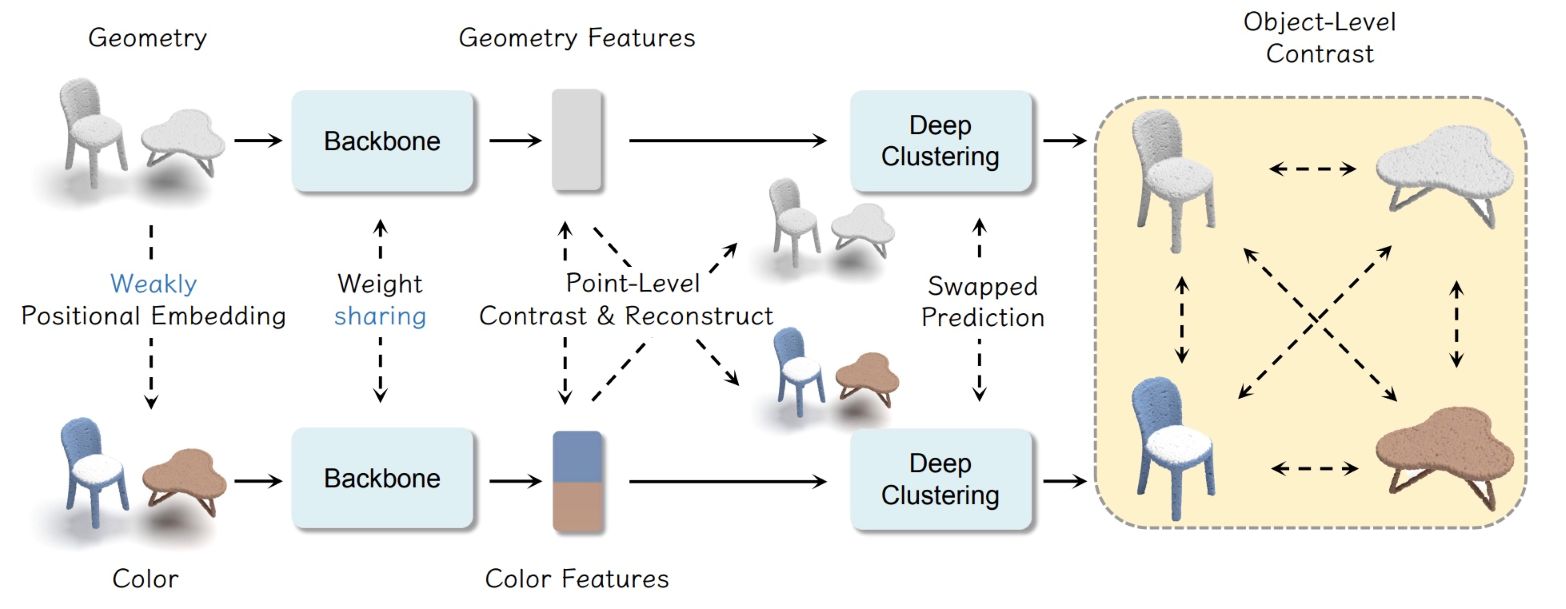

Guofan Fan, Zekun Qi, Wenkai Shi, Kaisheng Ma ACM International Conference on Multimedia (ACMMM), 2024 [arXiv] [Code] We enhance the utilization of color information to improve 3D scene self-supervised learning. |

|

Runpei Dong*, Chunrui Han*, Yuang Peng, Zekun Qi, Zheng Ge, Jinrong Yang, Liang Zhao, Jianjian Sun, Hongyu Zhou, Haoran Wei, Xiangwen Kong, Xiangyu Zhang, Kaisheng Ma, Li Yi International Conference on Learning Representations (ICLR), 2024, Spotlight [arXiv] [Project Page] [Code] [Huggingface] We present DreamLLM, the first learning framework to achieve versatile Multimodal Large Language Models empowered by the frequently overlooked synergy between multimodal comprehension and creation. |

|

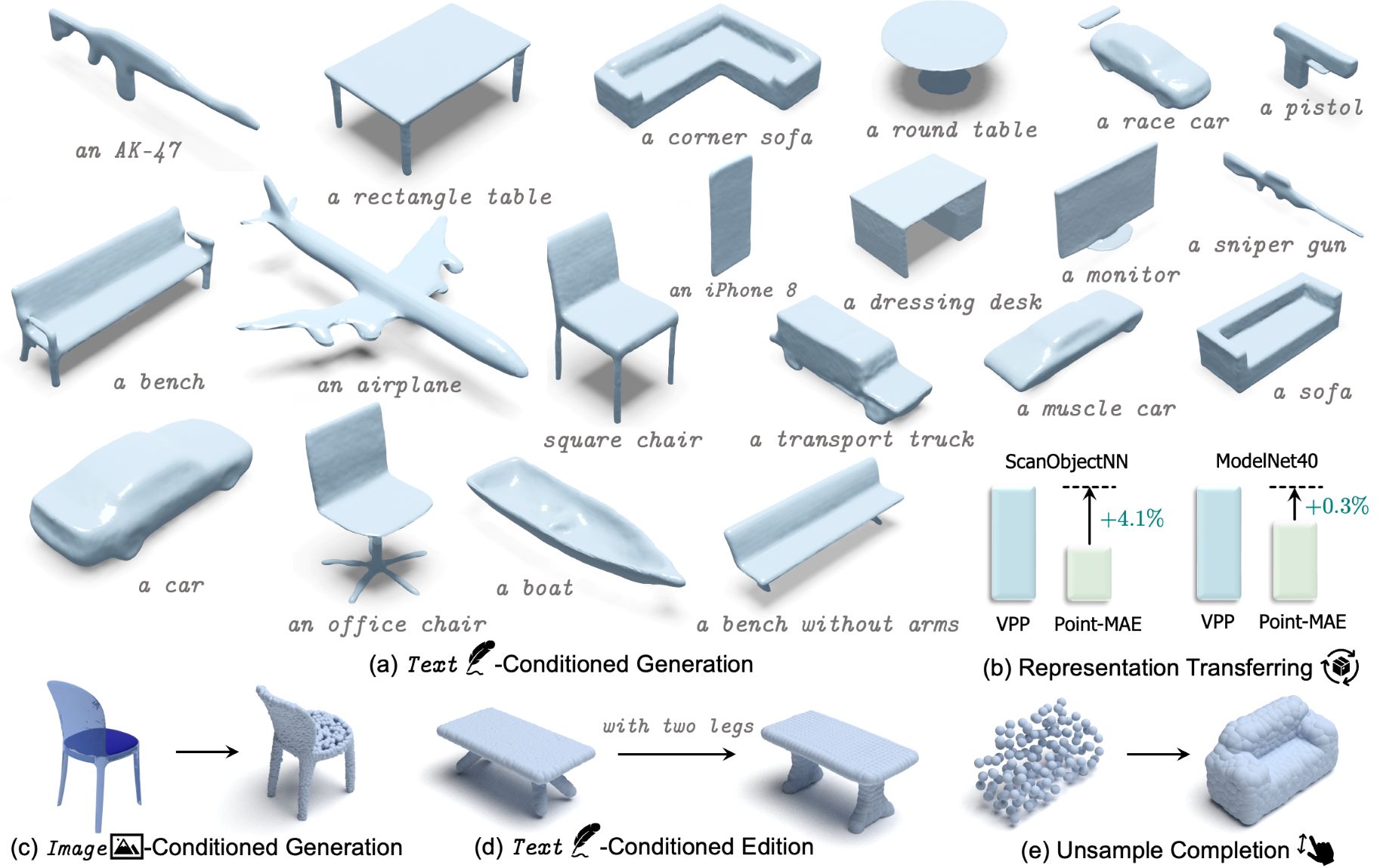

Zekun Qi*, Muzhou Yu*, Runpei Dong, Kaisheng Ma Conference on Neural Information Processing Systems (NeurIPS), 2023 [arXiv] [Code] [OpenReview] We achieve rapid, multi-category 3D conditional generation by sharing the merits of different representations. VPP can generate 3D shapes in less than 0.2s using a single RTX 2080Ti. |

|

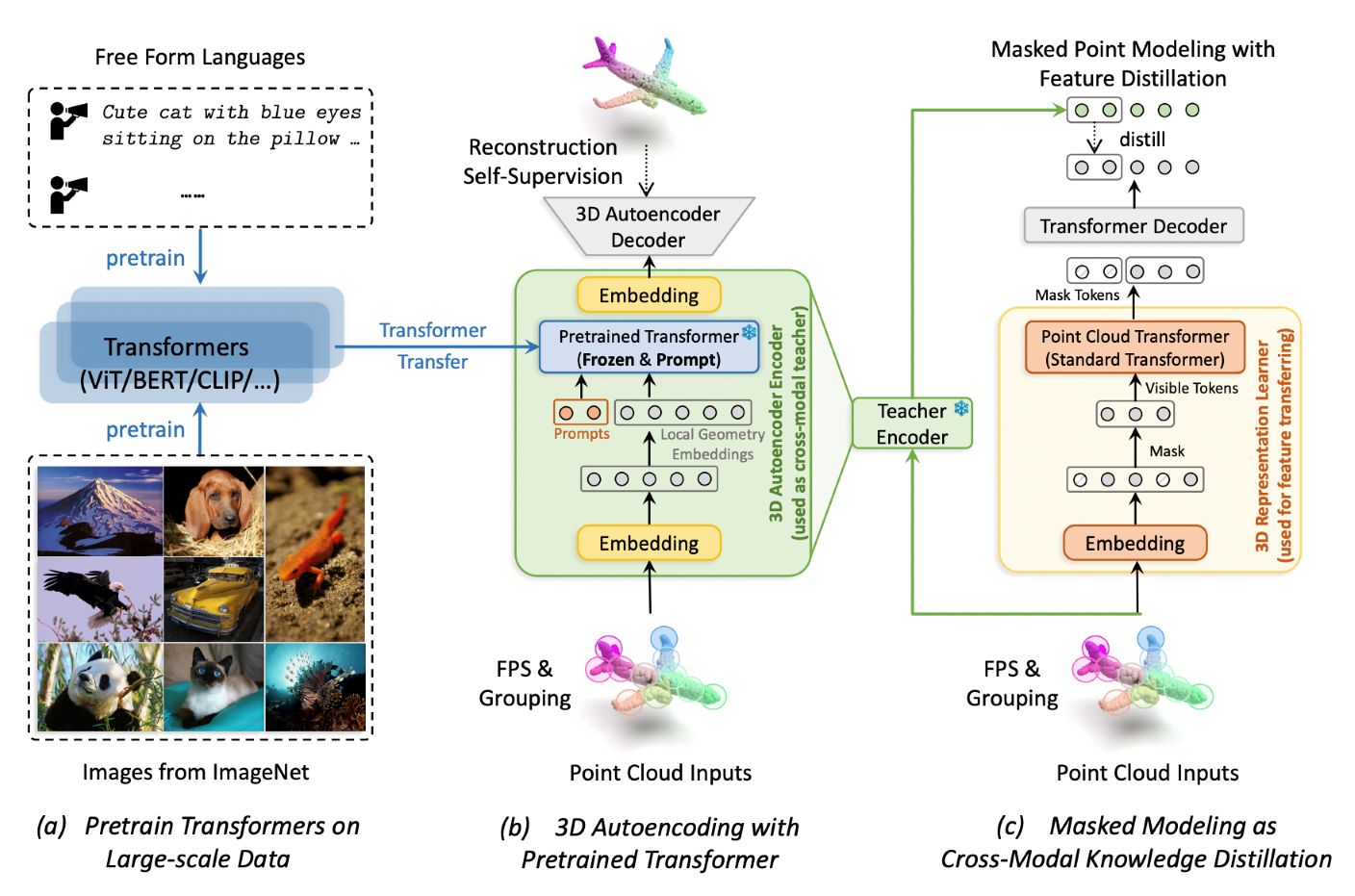

Zekun Qi*, Runpei Dong*, Guofan Fan, Zheng Ge, Xiangyu Zhang, Kaisheng Ma, Li Yi International Conference on Machine Learning (ICML), 2023 [arXiv] [Code] [OpenReview] We propose contrastive learning guided by reconstruction to mitigate the pattern differences between the two self-supervised paradigms. |

|

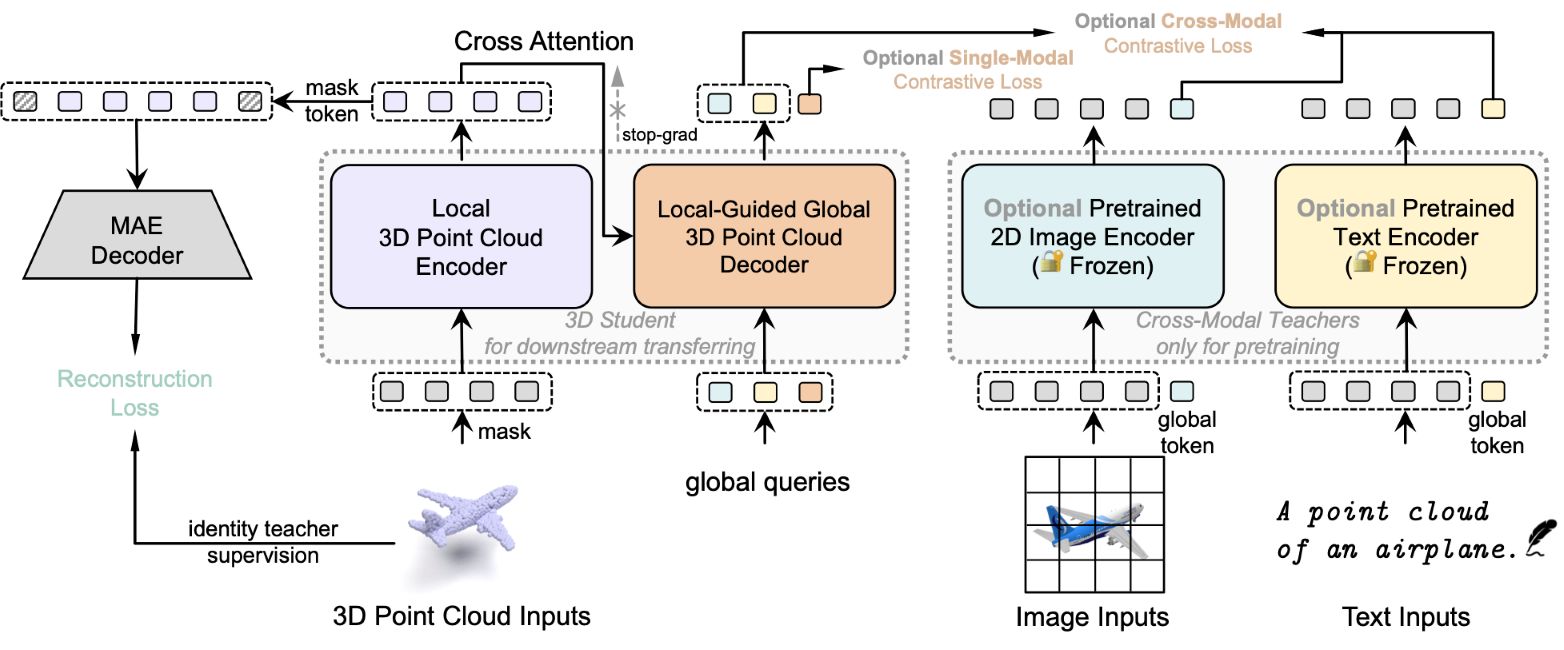

Runpei Dong, Zekun Qi, Linfeng Zhang, Junbo Zhang, Jianjian Sun, Zheng Ge, Li Yi, Kaisheng Ma International Conference on Learning Representations (ICLR), 2023 [arXiv] [Code] [OpenReview] We propose to use autoencoders as cross-modal teachers to transfer dark knowledge into 3D representation learning. |